UCB CS294/194-196 Large Language Model Agents

Course Structure and Content:

- Foundations of LLMs: The course begins by discussing the fundamental concepts underlying LLMs, including their architectures and training methodologies.

- Essential Abilities for Task Automation: Students will delve into the reasoning and planning capabilities of LLMs, focusing on how these models can utilize tools and perform complex tasks autonomously.

- Agent Development Infrastructure: The curriculum covers the infrastructures required for developing LLM agents, such as retrieval-augmented generation techniques and agentic AI frameworks.

- Application Domains:

- Code Generation and Data Science: Exploration of how LLMs can assist in software development and data analysis tasks. • Multimodal Agents and Robotics: Investigation into LLMs’ capabilities in processing and integrating multiple data modalities, including their applications in robotics.

- Web Automation and Scientific Discovery: Study of LLM agents’ roles in automating web interactions and contributing to scientific research.

- Evaluation and Benchmarking: The course emphasizes the importance of assessing LLM agents’ performance through rigorous evaluation and benchmarking methodologies.

- Privacy, Safety, and Ethics: Discussions on the limitations, potential risks, and ethical considerations associated with current LLM agents, along with insights into directions for further improvement.

- Human-Agent Interaction and Alignment: Examination of how LLM agents interact with humans, focusing on personalization and alignment with user intentions.

- Multi-Agent Collaboration: Exploration of scenarios where multiple LLM agents collaborate to achieve complex objectives.

Lecture 01: LLM Reasoning

AI should be able to learn from just a few examples, like what humans usually do. Huamns can learn from just a few examples because humans can reason

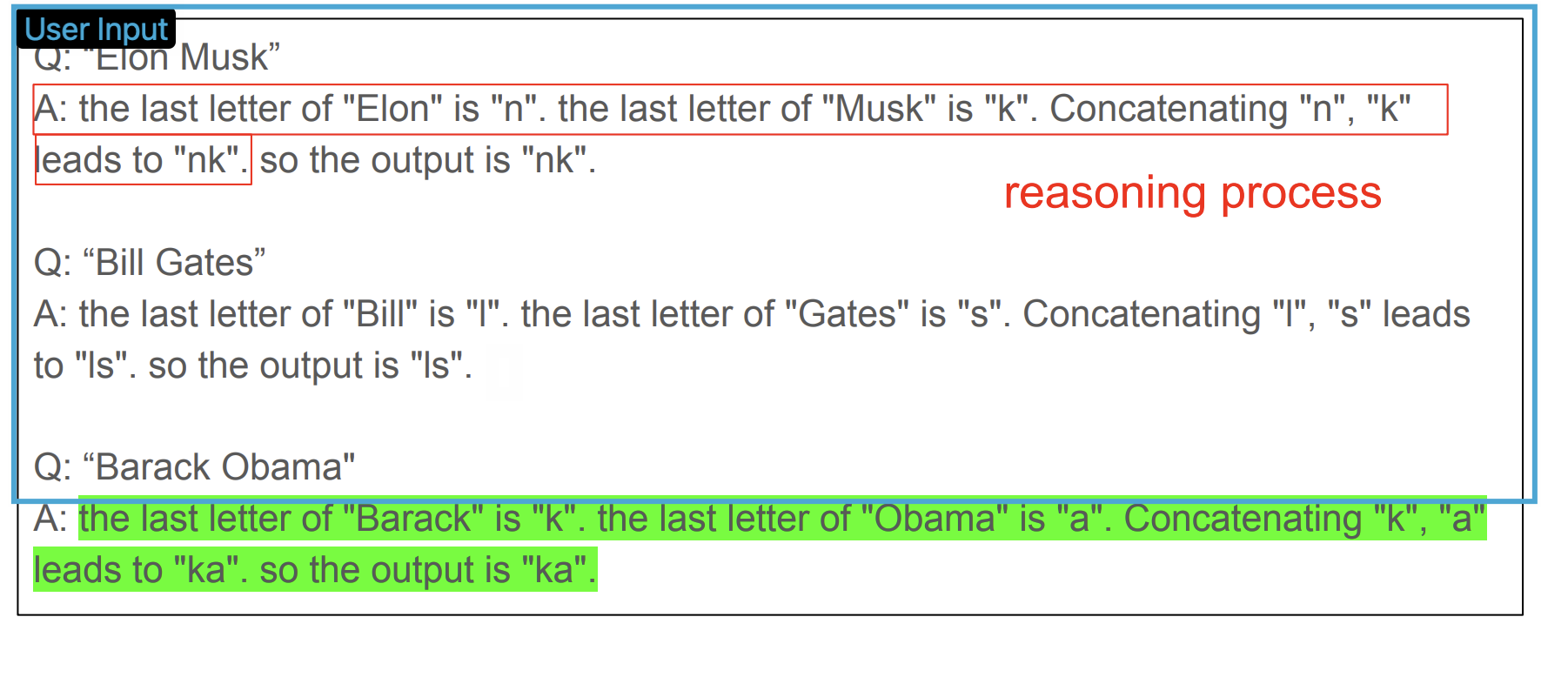

The basic LLM is just parrot mimic human languages. We can add a reasoning process before get answer. For example:

The reasoning process can be see as intermediate steps before get answer. So, we can conclude see that:

Key Idea:

Derive the Final Answer through Intermediate Steps

How we can add those property?

We need,

- Training with intermediate steps

- Filetuning with intermediate steps

- Prompting with intermediate steps

with curated dataset, for example, GSM8K (Cobbe et al., n.d.). One of the example data is:

{"question": "Natalia sold clips to 48 of her friends in April, and then she sold half as many clips in May. How many clips did Natalia sell altogether in April and May?",

"answer": "Natalia sold 48/2 = <<48/2=24>>24 clips in May.\nNatalia sold 48+24 = <<48+24=72>>72 clips altogether in April and May.\n#### 72"}BUT, why intermediate steps are helpful?

One of the theory proposed by (Li et al., n.d.) is that:

- Constant-depth transformers can solve any inherently serial problem as long as it generates sufficiently long intermediate reasoning steps

- Transformers which directly generate final answers either requires a huge depth to solve or cannot solve at all

that means:

- Generating more intermediate steps (think longer)

- Too long to generate? Calling external tools, e.g. MCTS

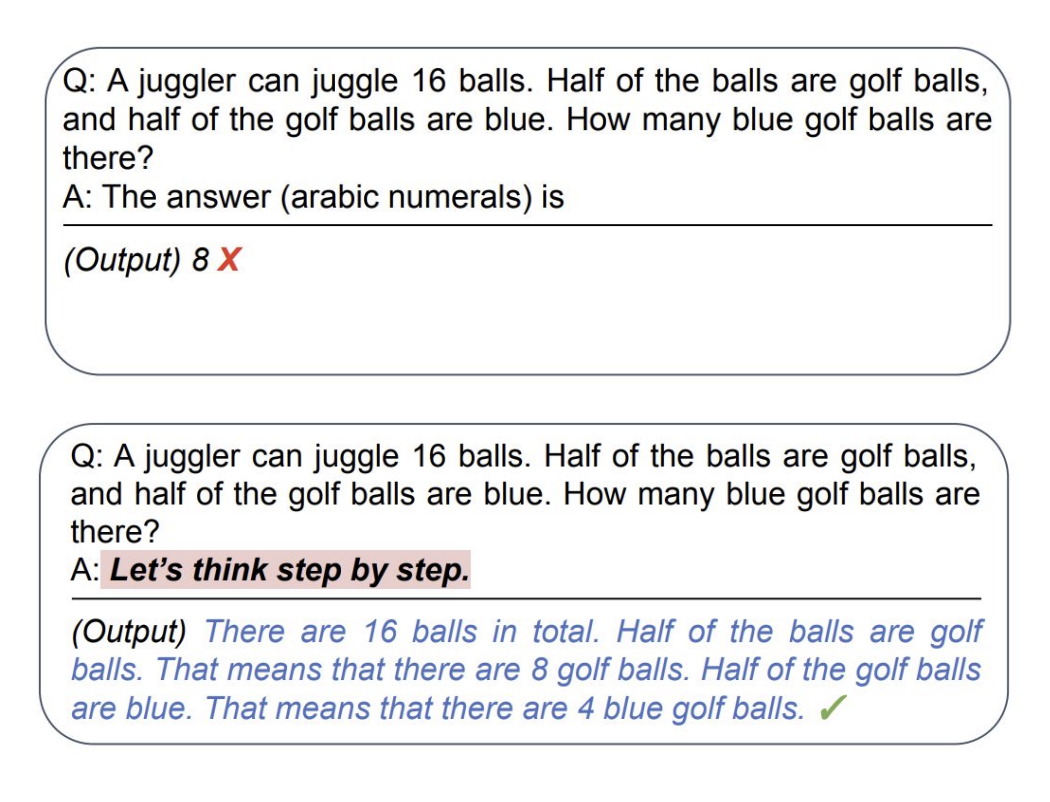

Further more, we can trigger step by step reasoning without using demonstrate examples:

by just add prompt let's think step by step

Howevery, this zero-shot(not provide any example) is worse than few-shot learning(provide several examples). How can we improve the zero-shot learning ability. One of the good discover is that LLMs are analogical reasoners (Yasunaga et al., n.d.). Which mean:

adaptively generate relevant examples and knowledge, rather than just using a fix set of examples

What more? Well, we are so lazy, is there any method that we can not even pass let's think step by step prompts? (chai?) propose a chain-of-thought decoding, which enable LLM do the reasoning process without explicit prompts.

One notice is that, we don’t want intermediate steps, what we want is just the final answer. How we can utilise the reason process to get better answer. One basic idea is we merge all the intermediate steps. This is call Self-Consistency (10.48550/arXiv.2203.11171?), which get the most highest probability answer,

So far, we talk good side about the LLM. what is the silver side? Well, LLM is easily distracted by irrelevant context. And it cannot Self-Correct Reasoning.